A Brief Introduction to HappenVR

Our final report is available for download here.

January 10, 2017 | by: Si Te Feng

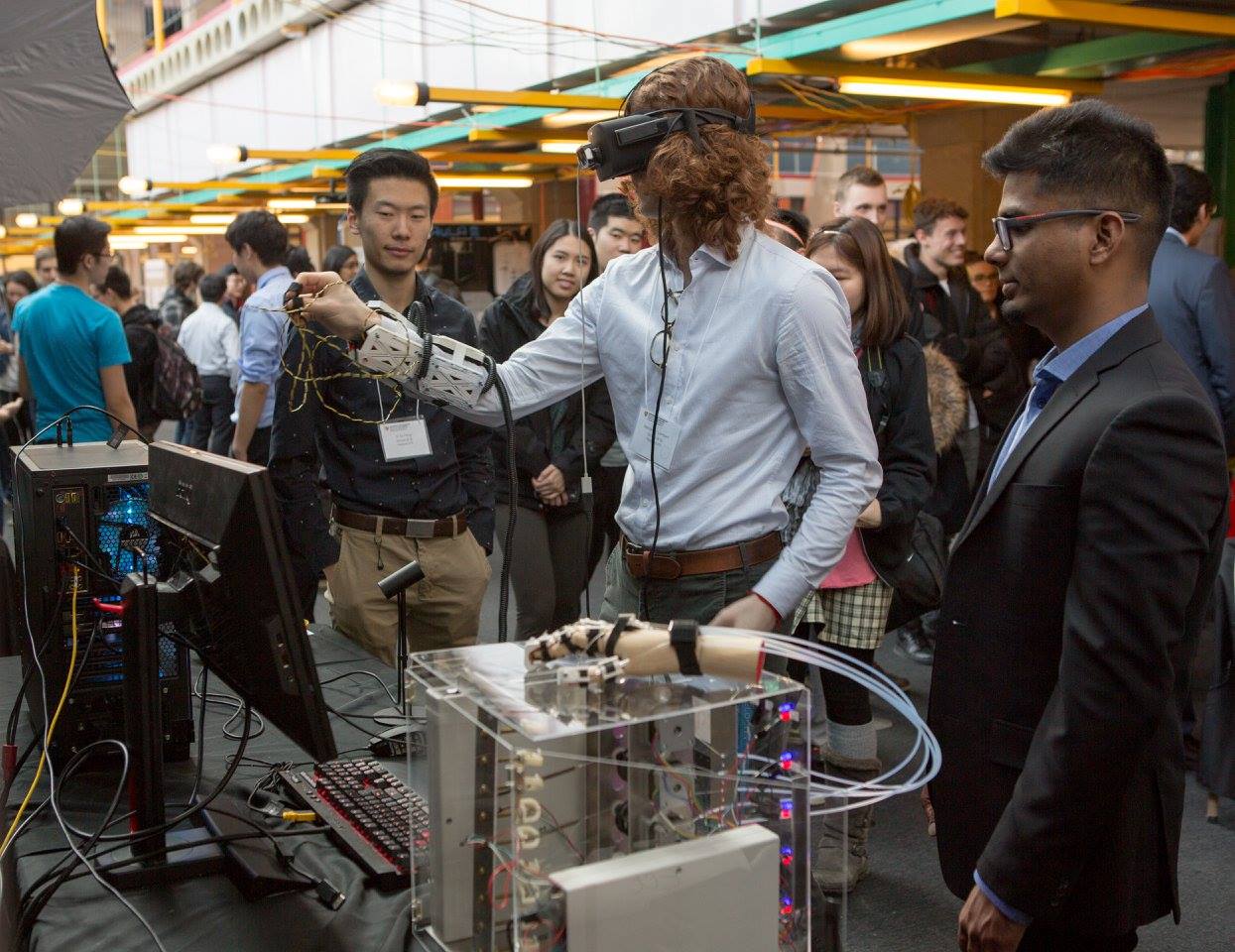

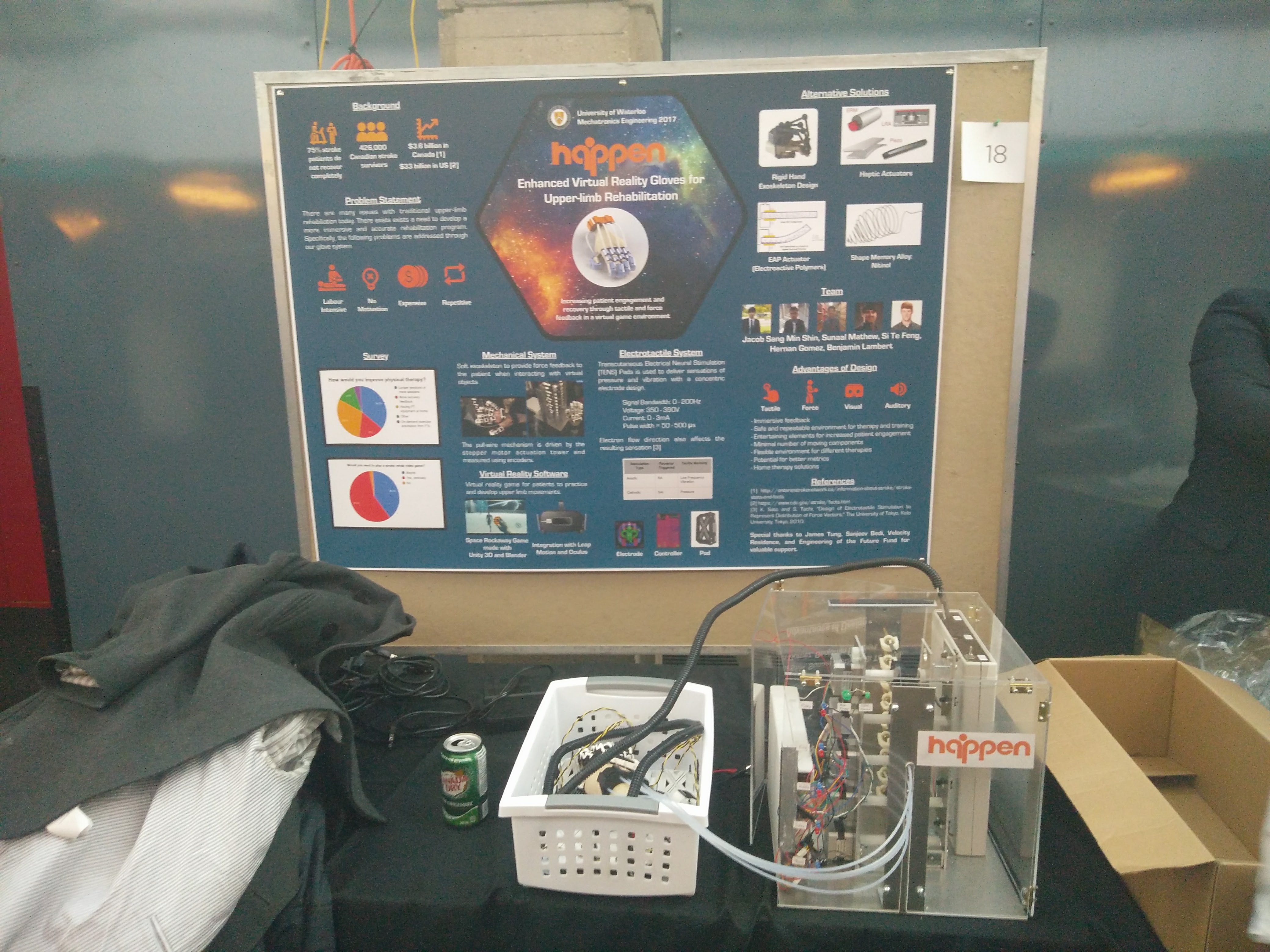

Stroke is a leading cause of adult disability in Canada as well as most countries around the world. There exists a need to improve conventional therapy since only 10% of stroke patients in Canada recover completely [1]. Studies have shown that by increasing the intensity and quantity of exercises, as well as improving patient engagement and motivation, stroke recovery can be improved. Team Happen aims to develop a Virtual Reality Haptic Glove that provides immersive stroke therapy, recovery metrics based on data from the user’s kinematics, and the ability to train independently at home.

The final solution must be functional for at least one hand, provide visual, force, and tactile feedback while the patient interacts with a virtual environment, and be safe to use. While considering potential solutions, the team used selection criteria including minimal interaction delay and a large range of motion with minimal biomechanical impedance, to ensure an immersive experience for the user. The final solution can be broadly divided into four systems: force feedback, tactile feedback, motion tracking, and software for virtual reality and recovery metrics. Each of these systems is explored in this report, with each team member contributing their respective section.

The force feedback system uses a pull-wire soft exoskeleton that restricts the fingers through soft cables driven by stepper motors. This allows the user to experience force feedback and physical restriction while interacting with virtual objects. For tactile feedback, the selected solution is an electrotactile system, in which regulated current is passed through the skin of the fingertip to directly stimulate the nerve fibers connected to mechanoreceptors. This produces the perception of tactile sensations such as pressure, vibration, and skin stretch, allowing the user to feel the shape and texture of virtual objects and providing an immersive experience aimed at improving stroke therapy. The third system, motion tracking, uses Inertial Measurement Unit (IMU) sensors to track the position and orientation of the hand, and the pull-wire system coupled with rotary encoders to track finger joint angles. Lastly, the software system for virtual reality and recovery metrics uses the Unity 3D game engine, the 3D modeling application Blender, and the Arduino embedded platform. It plays a critical role in interfacing between sensors and actuators through digital control systems, while providing intelligent recovery tracking and expert metrics for stroke patients.
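As a rough illustration of how pairing the pull-wire with a rotary encoder can yield a finger joint angle, the sketch below converts encoder counts into cable excursion and then into joint flexion. It assumes a simple tendon model in which cable excursion equals a constant moment arm times the joint angle; the encoder resolution, spool radius, and moment arm are hypothetical placeholders, not HappenVR design values.

```python
import math

# Hypothetical placeholder constants, not HappenVR design values.
COUNTS_PER_REV = 600        # encoder counts per full spool revolution
SPOOL_RADIUS_MM = 5.0       # radius of the spool that winds the pull-wire
JOINT_MOMENT_ARM_MM = 8.0   # assumed constant distance from joint axis to cable


def cable_excursion_mm(counts: int) -> float:
    """Length of cable wound (positive) or unwound (negative) by the spool."""
    spool_angle_rad = 2 * math.pi * counts / COUNTS_PER_REV
    return SPOOL_RADIUS_MM * spool_angle_rad


def joint_angle_deg(counts: int) -> float:
    """Estimate joint flexion, assuming excursion = moment arm * joint angle."""
    return math.degrees(cable_excursion_mm(counts) / JOINT_MOMENT_ARM_MM)


# A quarter turn of the spool (150 counts) maps to 56.25 degrees of flexion
# with these placeholder constants.
print(joint_angle_deg(150))
```

In a real glove each joint would likely need its own moment arm, with the constants obtained by calibrating against known hand poses.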

[1] Ontario Stroke Network, "Stroke Stats and Facts," Cardiac Care Network, 2016. [Online]. Available: http://ontariostrokenetwork.ca/information-about-stroke/stroke-stats-and-facts/. [Accessed 4 December 2016].

HappenVR is Here to Improve the State of VR

February 16, 2017 | by: Si Te Feng

Conventional exercises for upper-limb rehabilitation involve simple physical objects and devices, and the therapists are the main source of engagement and motivation. This places a high physical and cognitive load on therapists, and patients performing these exercises repetitively may lose engagement. Modern therapy uses video games on devices like the Nintendo Wii, which engage patients but are limited to vibration-motor feedback. Several haptic devices can provide better feedback, but they are bulky, expensive, and hard to extend. Thanks to recent technological advances, HappenVR is a thin glove that provides enhanced haptic feedback to promote patient engagement and recovery, while also reducing the physical and cognitive load on therapists.

The release of the Orion SDK by Leap Motion last year helped make our glove thin and light. Users can now track their hands and use them to interact naturally with virtual objects, which significantly reduces the cost of hand tracking. Meanwhile, direct electrical stimulation to produce the sensation of touch and pressure is a cost-effective technique that has shown early signs of success. Our team has since focused on understanding and improving this technique by reading many related white papers and performing hands-on experiments with different electrodes and input signals. In short, these two timely technologies have given our team the unique ability to transform the way medical therapies are traditionally done.

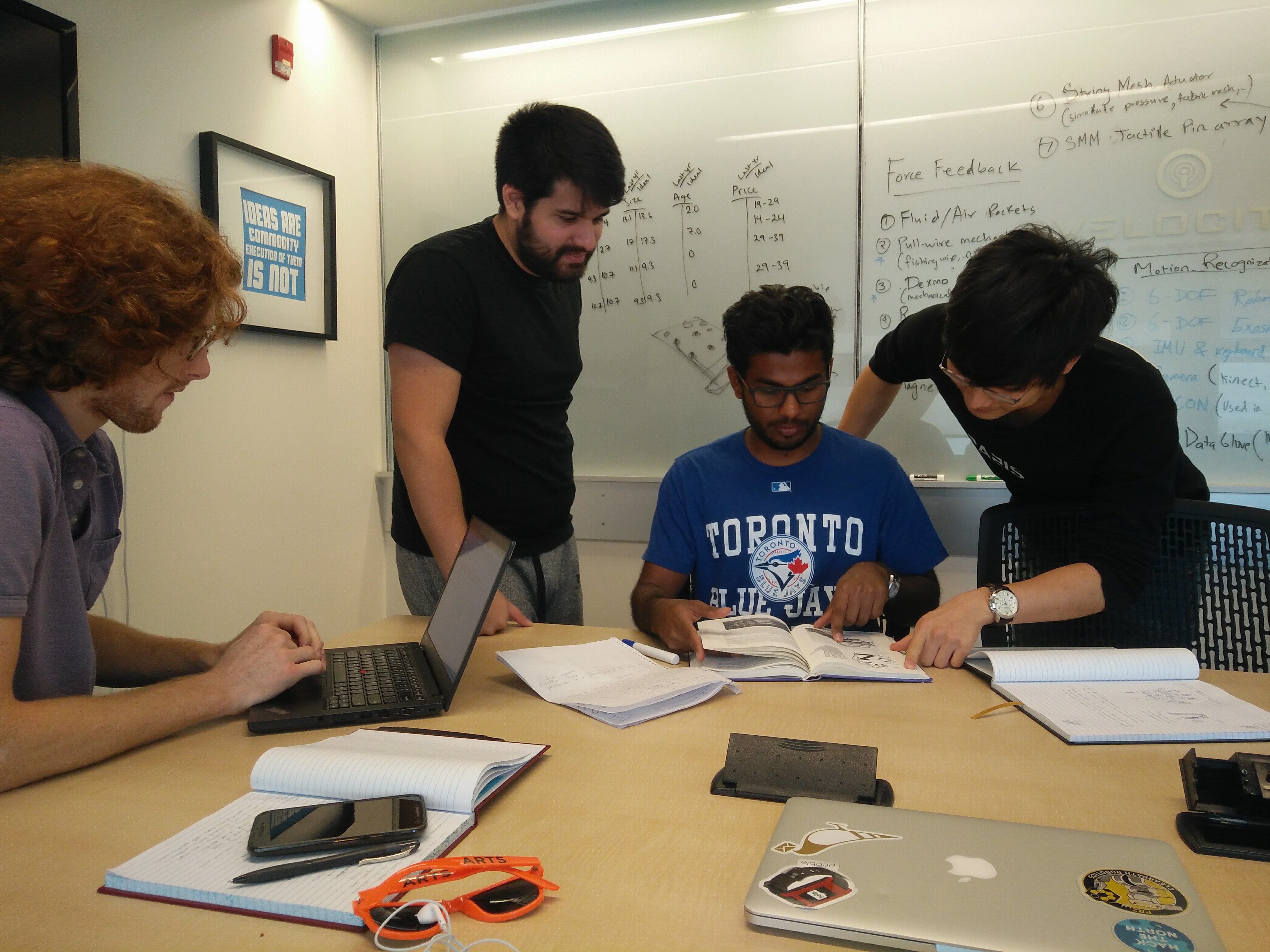

About Our Team

February 10, 2017 | by: Si Te Feng

Our team members have a wide range of engineering skills developed through years of industry experience, which has enabled us to pursue this multidisciplinary project. We are Mechatronics Engineering students who share a fervent passion for improving the lives of patients. Above all, we are a team that jumps at opportunities to take the initiative.

Our software lead, Si Te, has been an organizer of Hack the North for two years since its inception; the hackathon has helped 1000 hackers from 80 countries make the change they want to see in 36 hours. He has also led outreach efforts as VP of Projects at uwBlueprint.org, a student pro-bono software organization that helps local charities with technology. Besides cultivating an inclusive student technology community, Si Te is excited about software and wearables. He has previously contributed to the development of the Pebble 2 smartwatch, released mobile apps for high school students on the App Store, and participated in hackathons, winning grand prizes at Stanford University and the University of Michigan.

Ben, our embedded design lead, brings biomedical hardware design expertise to the team from his gesture measurement projects at Tesla, 3D human motion tracking hardware research at Nest Labs, and real-time operating system platform bring-up for Kiwi Wearable Technologies. In addition to his embedded and hardware design leadership, Ben brings biomedical signal processing and neural system simulation expertise to HappenVR, allowing the company to develop cutting-edge motion performance metrics for rehabilitation patients in need of our solution.

Sunaal works on the electrical and hardware design for HappenVR and, along with Ben, on the electrotactile feedback system. Sunaal brings experience in hardware design and engineering at a medical device startup, PCB design, software engineering, and game development, as well as user-centred design experience from IDEO. He has experience with bio-signal processing and sensor design for EMG and ECG applications. Sunaal also handles customer discovery for this project, communicating with external companies, researchers, and prospective customers to investigate the project's applications.

Hernan, our mechanical design and manufacturing lead, brings expertise in mechanical design and prototype development. Hernan gained this experience over multiple co-op terms in R&D-related positions at companies ranging from large corporations to startups, including medical device companies such as Baylis Medical and Conavi Medical. During these co-op terms, Hernan had the chance to propose, develop, and test various design alternatives across different development stages of electromechanical devices. In addition, Hernan has experience preparing a 510(k) regulatory application for the US FDA, has participated in animal trials, and has completed quality-assurance tasks.

Jacob (Sang Min) is our system integration lead. He is involved with the software and works with Si Te on both software integration and the virtual reality game demo. Sang Min's main area of expertise is project management, but he also has experience in mechanical design and software engineering, gained from co-op placements at companies including BMW and Alcohol Countermeasure Systems. In some of these placements, Sang Min worked through different stages of the project life cycle, particularly documentation, planning, and presentation.

HappenVR TENS Mesh Design and Fabrication

February 7, 2017 | by: Si Te Feng

At the center of our enhanced virtual reality experience is the custom-designed glove with a tactile feedback system. Tactile feedback is the simulation of touch, so that patients can not only see and hear 3D objects in the virtual environment, but also touch and feel them. To achieve this, Team Happen has been working diligently to design a TENS (Transcutaneous Electrical Nerve Stimulation) mesh that touches the inner sides of the fingers. The TENS mesh is envisioned as a thin, flexible printed circuit board (PCB) with circular gold-plated electrodes around 1 mm in diameter and 2 mm apart. The TENS mesh simulates touch by delivering a tiny, varying current (around 1-3 mA) with various frequencies, phases, and current travel patterns.
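To keep stimulation within the quoted range, the host software or firmware could validate every stimulus request before it reaches the current regulator. The sketch below is a hypothetical parameter record, not the team's code: the field names are assumptions, "phase" is interpreted here as the duration of one pulse phase, and the 1-3 mA window simply mirrors the figure above.

```python
from dataclasses import dataclass

# Assumed safety window, mirroring the "around 1-3 mA" figure in the post.
MIN_CURRENT_MA = 1.0
MAX_CURRENT_MA = 3.0
PATTERNS = ("anodic", "cathodic")  # current travel patterns described in the post


@dataclass(frozen=True)
class StimulusParams:
    """Hypothetical stimulus request for one TENS pad."""
    current_ma: float    # regulated current amplitude
    frequency_hz: float  # pulse repetition frequency
    phase_us: float      # assumed meaning: duration of one pulse phase
    pattern: str         # "anodic" or "cathodic"

    def __post_init__(self) -> None:
        if not (MIN_CURRENT_MA <= self.current_ma <= MAX_CURRENT_MA):
            raise ValueError(
                f"current {self.current_ma} mA outside "
                f"{MIN_CURRENT_MA}-{MAX_CURRENT_MA} mA")
        if self.frequency_hz <= 0 or self.phase_us <= 0:
            raise ValueError("frequency and phase duration must be positive")
        # A single phase cannot last longer than one full period.
        period_us = 1e6 / self.frequency_hz
        if self.phase_us > period_us:
            raise ValueError("phase duration exceeds the pulse period")
        if self.pattern not in PATTERNS:
            raise ValueError(f"unknown pattern {self.pattern!r}")


# A 10 kHz signal (100 us period) with 50 us phases at 2 mA passes validation.
ok = StimulusParams(current_ma=2.0, frequency_hz=10_000, phase_us=50, pattern="anodic")
```

Rejecting bad requests with an exception, rather than silently clamping them, makes an out-of-range command visible during testing.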

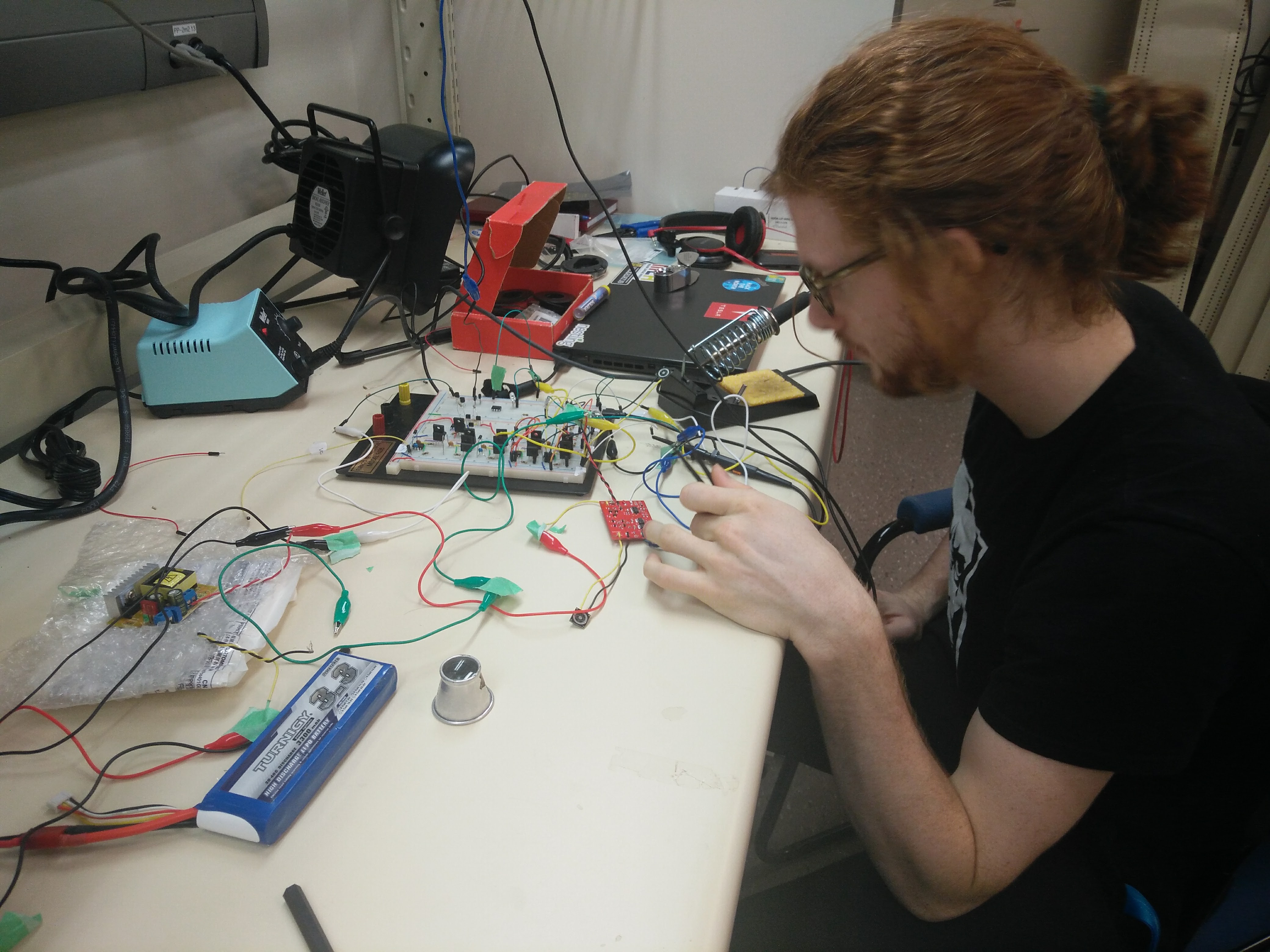

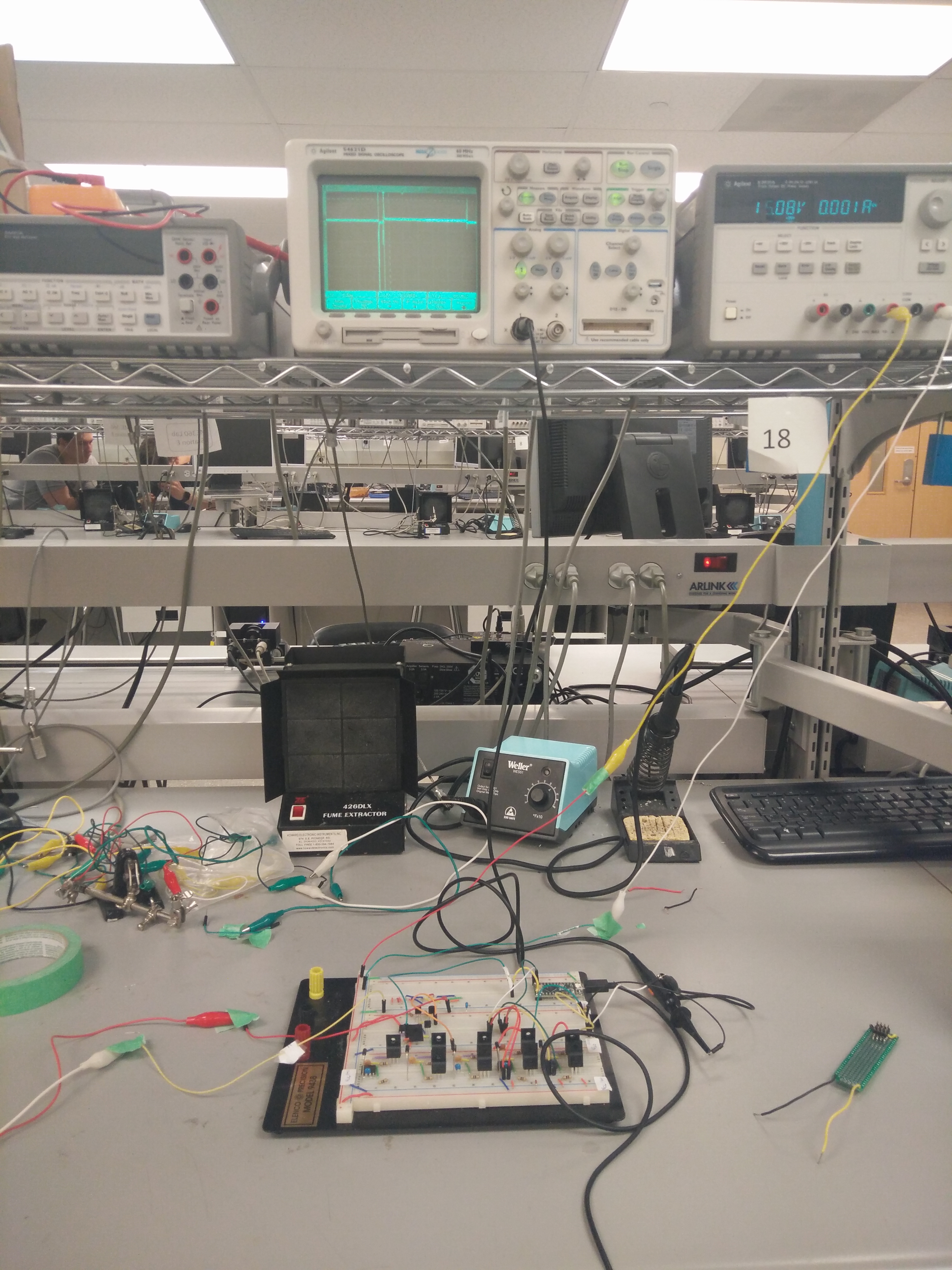

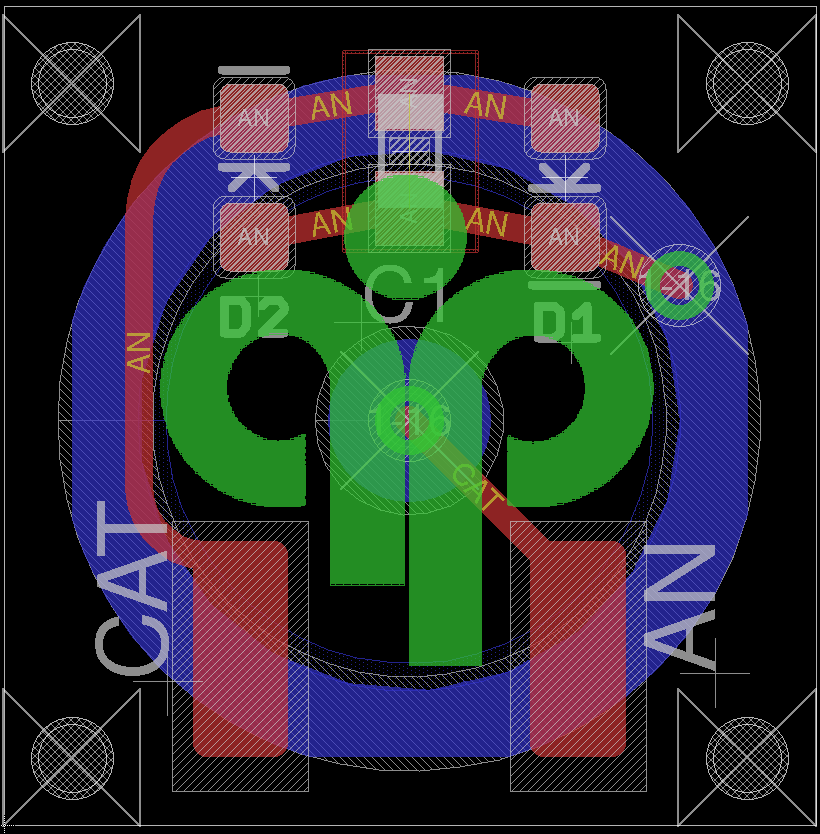

Yesterday, the electrical team successfully produced both anodic and cathodic electrical stimulation on the finger from a 4 by 4 electrode grid for the first time. The words anodic and cathodic refer to the direction of current travel: in anodic stimulation, positive current travels concentrically from the inner electrode to the outer electrodes, whereas cathodic stimulation does the opposite. Producing the sensation is a huge milestone for the team, as it validates almost all of the previous engineering work that went into the design of the TENS circuit. The reliable sensation was made possible by the steady current from the current regulation circuit that the team has been working on for the last 3 months. The resulting stimulation feels as though the electrodes are pushing up against the finger, when in fact there is no physical movement. When the input signal is a square wave with a 100 μs period, the resulting sensation is an oscillating vertical force of around 12 Hz. Our current understanding is that increasing either the phase of the signal or the output current increases the force sensation. The photo below shows yesterday's testing setup.
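The anodic and cathodic patterns can be described as a polarity map over the electrode grid. In the hypothetical sketch below, electrodes off the grid border count as "inner" (the central 2 by 2 block of a 4 by 4 grid), +1 marks a current source and -1 a current sink. The square-wave helper uses the 100 μs period from the test, which corresponds to a 10 kHz signal; the 50% duty cycle is an assumption.

```python
from typing import List


def concentric_polarity(n: int, mode: str) -> List[List[int]]:
    """Return +1 (source) or -1 (sink) for each electrode of an n x n grid.

    Inner electrodes are those not on the grid border. "anodic" drives
    current from the inner electrodes outward; "cathodic" reverses it.
    """
    if n < 3:
        raise ValueError("need at least a 3 x 3 grid to have inner electrodes")
    if mode not in ("anodic", "cathodic"):
        raise ValueError(f"unknown mode {mode!r}")
    inner_sign = 1 if mode == "anodic" else -1
    grid = []
    for row in range(n):
        line = []
        for col in range(n):
            on_border = row in (0, n - 1) or col in (0, n - 1)
            line.append(-inner_sign if on_border else inner_sign)
        grid.append(line)
    return grid


def square_wave_level(t_us: float, period_us: float = 100.0, duty: float = 0.5) -> int:
    """1 while the square wave is high, 0 while it is low (assumed 50% duty)."""
    return 1 if (t_us % period_us) < duty * period_us else 0


for row in concentric_polarity(4, "anodic"):
    print(row)
```

Flipping the mode simply negates every entry, which matches the post's description of cathodic stimulation as the opposite of anodic.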

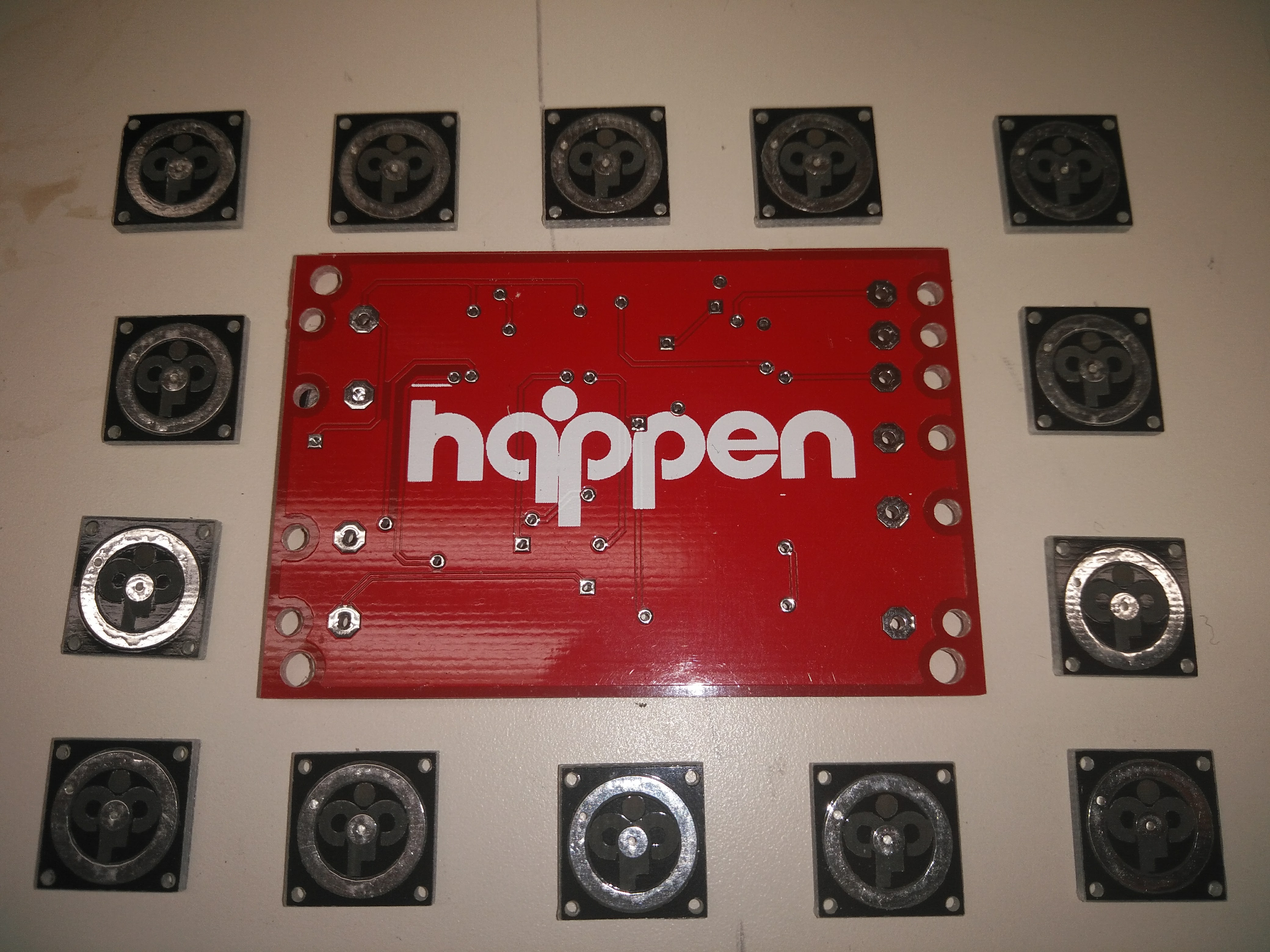

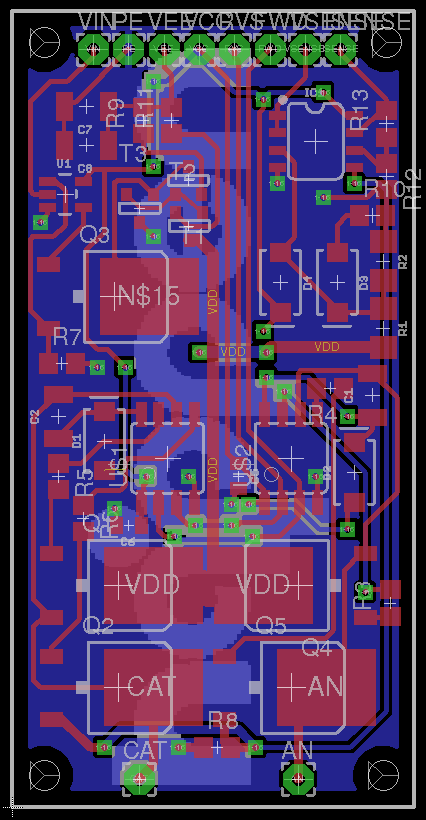

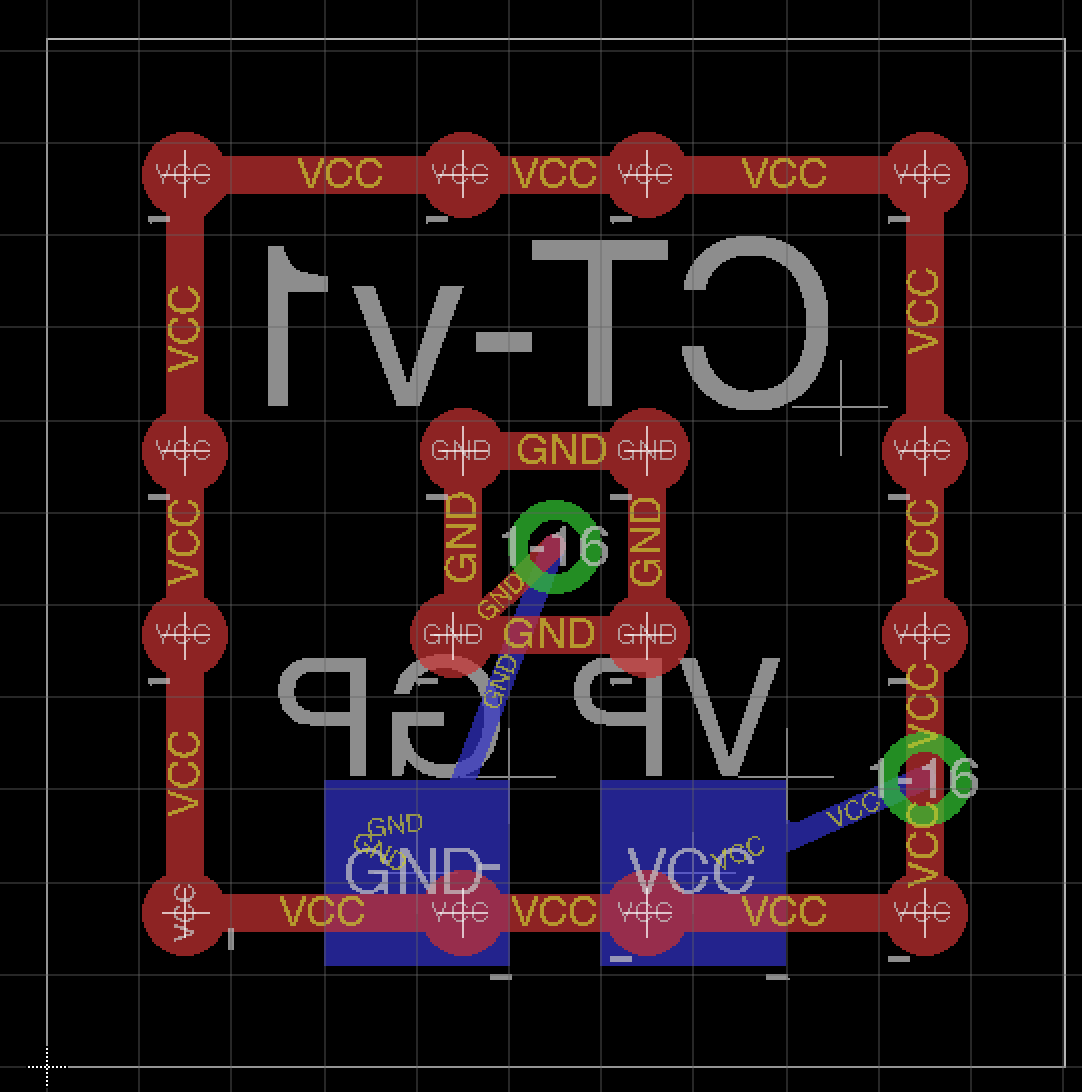

Now that the prototype circuit has been validated, the electrical team has decided that the next step is to start the PCB manufacturing process and to replace the breadboard DIP components with SMT components for the PCB. This will drastically reduce the size of the electrical circuit in preparation for the Mechatronics Symposium demonstration. In addition, the team will use EAGLE to design PCBs for both the current regulation circuit and the TENS mesh. The TENS mesh will be printed on a rigid PCB as usual during the prototyping stages, but will eventually be converted to a flexible design. In order to try out different configurations, two TENS PCBs will be designed: a 3 by 3 electrode grid and a 4 by 4 electrode grid. One TENS PCB will be placed under each finger segment, for a total of 14 pieces. Additional pieces might be placed in the palm area as well.
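A quick way to sanity-check the two pad layouts is to compute their electrode footprint and the total electrode count per glove. The sketch assumes "2 mm apart" means centre-to-centre spacing and counts one pad per finger segment (two phalanges for the thumb, three for each other finger), which gives the 14 pieces mentioned above.

```python
ELECTRODE_DIAMETER_MM = 1.0
PITCH_MM = 2.0  # assumption: centre-to-centre spacing between electrodes

# One pad per finger segment (phalanx): two for the thumb, three for the others.
PHALANGES = {"thumb": 2, "index": 3, "middle": 3, "ring": 3, "little": 3}


def grid_span_mm(n: int, pitch_mm: float = PITCH_MM,
                 diameter_mm: float = ELECTRODE_DIAMETER_MM) -> float:
    """Edge-to-edge width of an n x n electrode grid."""
    return (n - 1) * pitch_mm + diameter_mm


def total_pads(phalanges: dict = PHALANGES) -> int:
    """Number of TENS pads if every finger segment gets one."""
    return sum(phalanges.values())


def total_electrodes(n: int, phalanges: dict = PHALANGES) -> int:
    """Electrodes per glove when every pad uses an n x n grid."""
    return n * n * total_pads(phalanges)


for n in (3, 4):
    print(f"{n}x{n}: {grid_span_mm(n)} mm wide, {total_electrodes(n)} electrodes")
```

Under these assumptions the 3 by 3 grid spans 5 mm and the 4 by 4 grid spans 7 mm, and a full glove of 4 by 4 pads needs 224 individually driven electrodes.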

TENS Mesh Driver PCB v1

4x4 TENS Pad PCB v1

Concentric TENS Pad PCB v2

The team plans to consolidate the design by the end of the week and send out the first batch of orders next week. The PCBs will be ordered from PCBWay, a company that provides quality PCB printing services and fast shipping. We hope that by quickly iterating through designs and trying many input signals with our virtual reality game, we can create a seamless tactile experience that engages patients at a level that has never been possible before.

New Virtual Environment Prototype with Unity 3D

January 28, 2017 | by: Si Te Feng

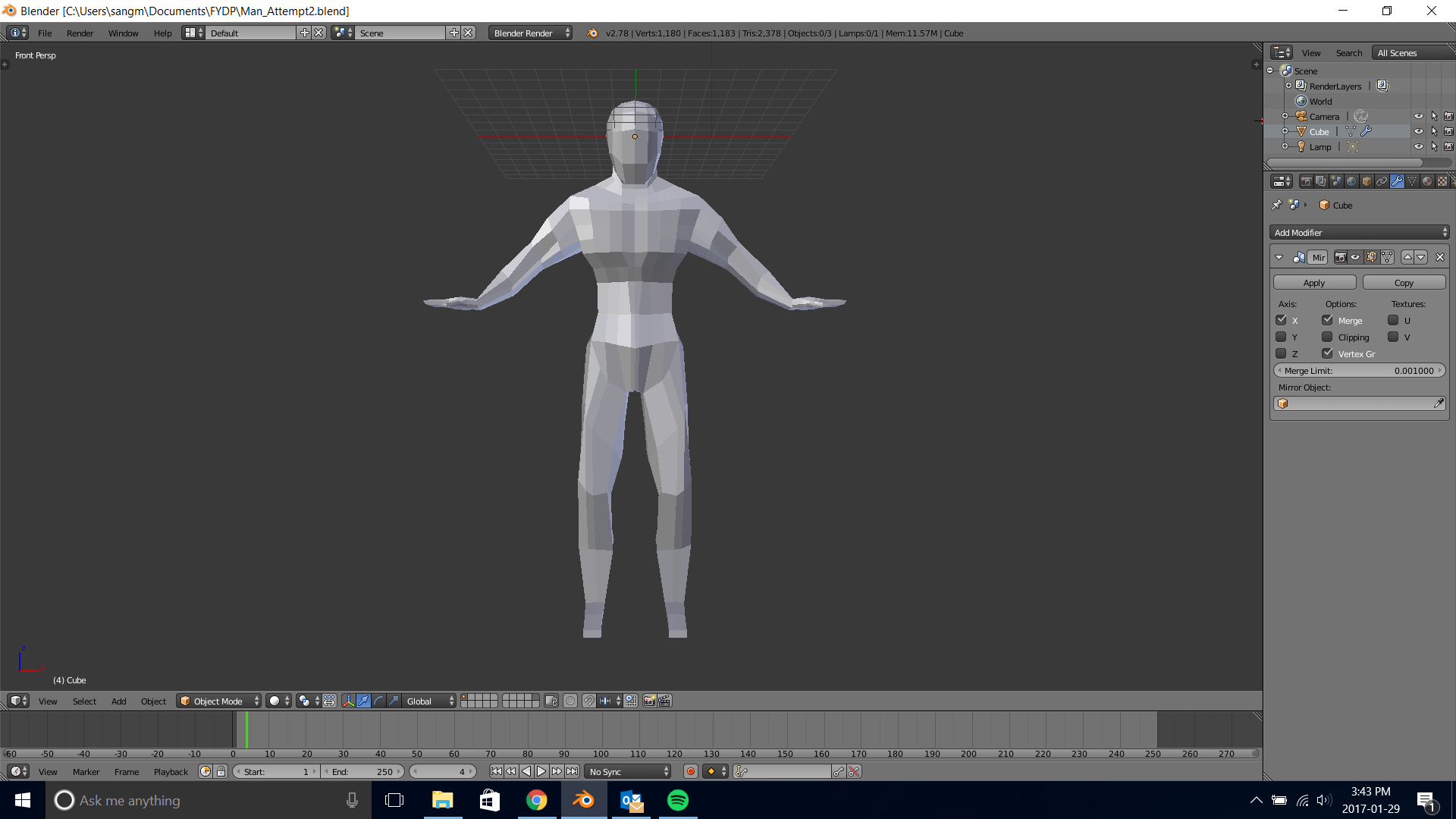

Today marks an exciting new milestone: the team completed the initial validation of the Unity 3D game engine as the main platform for the Happen virtual reality user interface. Over the last few weeks, the team has been designing the game mechanics, modeling animated characters in Blender, and scripting interactions between game objects. The most significant progress, however, is fully integrating the Leap Motion sensor with the virtual environment game prototype that we have created. Part of this interaction was made possible by the Leap Motion Orion SDK, which includes a "User Interaction" module that allows the detected hands to hold onto a virtual object without the built-in Unity physics engine pushing the object away due to boundary interference. Although the SDK does not provide the fully accurate touch callbacks that our virtual reality glove needs, we have written a custom collision detector based on spheres, which tells the program exactly how deep the user's fingers sink into an object, along with many other detailed parameters. With this level of information, we can accurately control the glove's stepper motors for force feedback and adjust the intensity of the sensation delivered by our TENS pads to the fingers.
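The sphere-based collision check can be illustrated with two spheres, one for a fingertip and one for an object such as the spherical rocks: the penetration depth is the sum of the radii minus the distance between the centres. The mapping from depth to TENS current below is a hypothetical linear scheme clamped to the 1-3 mA range, shown only to make the intensity-adjustment step concrete; the actual Unity implementation (written in C#) may work differently.

```python
import math
from typing import Sequence

Vec3 = Sequence[float]


def sphere_penetration(finger_center: Vec3, finger_radius: float,
                       object_center: Vec3, object_radius: float) -> float:
    """Depth by which a fingertip sphere sinks into a spherical object.

    Returns 0.0 when the spheres do not overlap. Units follow the inputs.
    """
    distance = math.dist(finger_center, object_center)
    return max(0.0, finger_radius + object_radius - distance)


def depth_to_current_ma(depth: float, max_depth: float,
                        min_ma: float = 1.0, max_ma: float = 3.0) -> float:
    """Hypothetical linear map from penetration depth to TENS current.

    No contact turns the pad off (0 mA); deeper contact scales up to max_ma.
    """
    if depth <= 0.0:
        return 0.0
    fraction = min(depth / max_depth, 1.0)
    return min_ma + fraction * (max_ma - min_ma)


depth = sphere_penetration((0.0, 0.0, 0.0), 1.0, (1.5, 0.0, 0.0), 1.0)
print(depth, depth_to_current_ma(depth, max_depth=1.0))
```

Clamping the fraction at 1.0 keeps a finger that passes deep into an object from requesting more than the maximum current.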

The game is called Rockaway, sharing its name with the beach in New York, but it is actually a first-person rock defense game against slow-moving polygon humanoids on a futuristic spaceship. The idea is to let users exercise their upper limb and hand movements by repeatedly grabbing variously shaped rocks from an altar and throwing them at the humanoids to gain points. The team plans to reduce the player's health if a humanoid reaches them, although that interaction has not yet been implemented. When a rock hits a humanoid, the humanoid plays its dying animation and is removed from the scene after a few seconds. We custom-made the polygon humanoid in Blender with a slew of animations, including crawling up from the ground, walking, crawling forward, dying while walking, dying while crawling, and a jump attack. All of these will eventually be integrated into the final prototype for the 2017 Mechatronics Symposium demonstration.
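The hit-and-remove behaviour described above amounts to a small state machine. The Python sketch below is illustrative only: the state names, the 10-point reward, and the 3-second removal delay (standing in for "a few seconds") are assumptions, and the unimplemented player-health logic is left out.

```python
from enum import Enum, auto

REMOVAL_DELAY_S = 3.0  # assumed stand-in for "a few seconds"
POINTS_PER_HIT = 10    # hypothetical score per humanoid


class HumanoidState(Enum):
    ACTIVE = auto()    # walking or crawling toward the player
    DYING = auto()     # dying animation is playing
    REMOVED = auto()   # removed from the scene


class Humanoid:
    def __init__(self) -> None:
        self.state = HumanoidState.ACTIVE
        self.time_dying = 0.0

    def on_rock_hit(self) -> int:
        """Start the dying animation; return points earned (0 if already hit)."""
        if self.state is not HumanoidState.ACTIVE:
            return 0
        self.state = HumanoidState.DYING
        self.time_dying = 0.0
        return POINTS_PER_HIT

    def update(self, dt: float) -> None:
        """Advance by dt seconds; remove the humanoid once the delay has passed."""
        if self.state is HumanoidState.DYING:
            self.time_dying += dt
            if self.time_dying >= REMOVAL_DELAY_S:
                self.state = HumanoidState.REMOVED
```

Returning 0 for a second hit on a dying humanoid prevents a player from scoring twice on the same target.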

The other game objects seen around the spaceship were sourced from BlendSwap, a website where designers freely share their Blender creations. The team was fortunate enough to find detailed robot and turret 3D models to use in Rockaway. These include the nicely designed spherical rocks with colored stripes that the player throws at the humanoids.

The team is already working on the next step in software development: writing serial communication scripts in C# and C that allow the Unity game running on a Windows 10 desktop to communicate freely with the Arduino microcontroller. This is essential for bridging the gap between our custom glove hardware and the Unity + Oculus + Leap Motion package.
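One way to structure that serial link is a small framed packet carrying the motor and TENS commands. The format below (start byte, length byte, payload, one-byte additive checksum) is a hypothetical example, not the team's protocol, and is written in Python for brevity; the real sender and receiver would be the C# and C scripts. Five motors (one per finger) and 14 pads are assumptions, the latter matching the pad count in the TENS post.

```python
import struct
from typing import Sequence, Tuple

START_BYTE = 0xAA
NUM_MOTORS = 5   # hypothetical: one stepper per finger
NUM_PADS = 14    # one TENS pad per finger segment
# Little-endian: signed 16-bit step targets, then unsigned 8-bit currents.
PAYLOAD_FMT = "<" + "h" * NUM_MOTORS + "B" * NUM_PADS


def encode_frame(motor_steps: Sequence[int], pad_current_ma: Sequence[float]) -> bytes:
    """Pack one command frame: start byte, length, payload, checksum."""
    if len(motor_steps) != NUM_MOTORS or len(pad_current_ma) != NUM_PADS:
        raise ValueError("wrong number of motor or pad values")
    # Send currents as tenths of a milliamp so each fits in one byte.
    tenths = [round(c * 10) for c in pad_current_ma]
    payload = struct.pack(PAYLOAD_FMT, *motor_steps, *tenths)
    checksum = sum(payload) & 0xFF
    return bytes([START_BYTE, len(payload)]) + payload + bytes([checksum])


def decode_frame(frame: bytes) -> Tuple[Tuple[int, ...], Tuple[float, ...]]:
    """Validate and unpack a frame produced by encode_frame."""
    if len(frame) < 3 or frame[0] != START_BYTE:
        raise ValueError("missing start byte or frame too short")
    length = frame[1]
    if len(frame) != length + 3:
        raise ValueError("truncated or oversized frame")
    payload = frame[2:2 + length]
    if sum(payload) & 0xFF != frame[2 + length]:
        raise ValueError("checksum mismatch")
    values = struct.unpack(PAYLOAD_FMT, payload)
    steps = values[:NUM_MOTORS]
    currents = tuple(v / 10 for v in values[NUM_MOTORS:])
    return steps, currents
```

On the Arduino side, the same layout could be parsed byte by byte after the start byte is seen, discarding any frame whose checksum does not match.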

In the coming weeks, the mechanical glove prototype will be fully manufactured and assembled, and the team hopes to integrate the Rockaway game with the glove electronics by then. As one would imagine, this final step of system integration will be challenging in many areas. The team is looking forward to the process and will update this blog as new progress comes along.